By Antonios Danelakis, Postdoc Researcher, Dept. Computer Science, NTNU

14th April 2021

Before its automation became commonplace, face recognition at border control points was performed manually by a law enforcement agent using typical 2-dimensional (2D) frontal facial images that came either from still captures or from video footage.

Then, computer science automated the process of face recognition at border control points by providing appropriate face recognition algorithms.

Up until now, these algorithms have also used the same 2D facial data as the basis for their recognition results. Recently, a new trend has emerged: utilizing 3-dimensional (3D) facial data. This blog post presents the nature of such data and analyzes the different aspects that need to be addressed in order for this trend to move from the labs of scientific institutes to regular practice.

Before continuing, it is critical to note that the D4FLY project is not designing tools for ubiquitous surveillance through facial recognition. All of the project’s tools are meant to be implemented at border crossing points where travelers have already consented to having their identity authenticated by border authorities. As such, the tools under development will not add new sites of surveillance to society. Rather, they seek to make current identity verification practices more accurate and efficient. For more details on the ethical, privacy and data protection elements of the project, please see https://d4fly.eu/ethics-privacy-and-data-protection-in-d4fly/ .

What is the 3D Face?

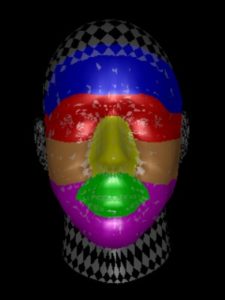

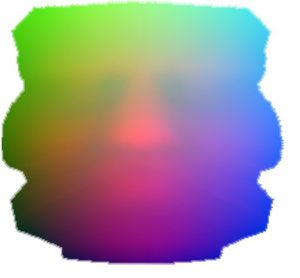

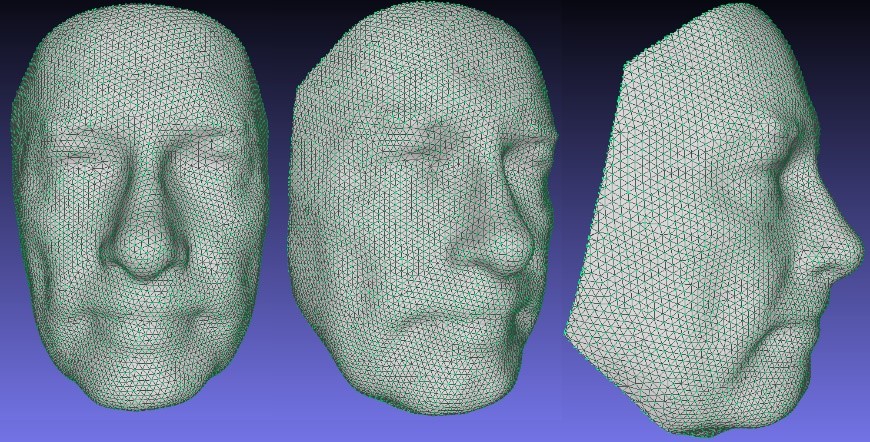

3D facial data are quite different from 2D facial data. A 3D face consists of a set of points in 3D space (vertices). These points are accompanied by an appropriate triangulation, that is, a set of triangles (facets) formed from the vertices. 3D facial data can contain many more attributes than just vertices and facets (e.g. color, material, normals) but, within the scope of this blog, we would like to keep it simple. The vertices and facets describe the entire face instead of just a frontal pose of it, as in the case of 2D data. The figure at the side provides the reader with a better understanding of the 3D data.
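To make the vertices-and-facets description concrete, here is a minimal sketch in Python. The `FaceMesh` class, its method names, and the toy coordinates are all hypothetical and chosen only for illustration; they are not taken from the D4FLY tools or from any particular 3D file format. Each facet is stored as three indices into the vertex list, which is a common way to represent a triangulated surface.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vertex = Tuple[float, float, float]   # one point in 3D space (x, y, z)
Facet = Tuple[int, int, int]          # one triangle, as indices into the vertex list


@dataclass
class FaceMesh:
    """Hypothetical container for a triangulated 3D surface."""
    vertices: List[Vertex]
    facets: List[Facet]

    def facet_area(self, index: int) -> float:
        """Area of one triangle: half the length of the cross product of two edges."""
        a, b, c = (self.vertices[i] for i in self.facets[index])
        ab = tuple(q - p for p, q in zip(a, b))
        ac = tuple(q - p for p, q in zip(a, c))
        cross = (
            ab[1] * ac[2] - ab[2] * ac[1],
            ab[2] * ac[0] - ab[0] * ac[2],
            ab[0] * ac[1] - ab[1] * ac[0],
        )
        return 0.5 * sum(x * x for x in cross) ** 0.5

    def surface_area(self) -> float:
        """Total area of all facets."""
        return sum(self.facet_area(i) for i in range(len(self.facets)))


# Toy data (not a real face): four vertices forming a unit square,
# split into two triangular facets.
toy = FaceMesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
    facets=[(0, 1, 2), (0, 2, 3)],
)

print(len(toy.vertices), "vertices,", len(toy.facets), "facets")
print("surface area:", toy.surface_area())
```

A real 3D face scan follows the same idea, only with many more vertices and facets, and possibly with the extra per-vertex attributes mentioned above.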

Why 3D Face instead of typical 2D Face?

To begin with, 2D face and 3D face data can always be used in a complementary manner. Nonetheless, 3D face recognition has the potential to improve reliability and security over state-of-the-art 2D face recognition systems. That is because 2D face recognition systems can be manipulated or spoofed with printed photographs, videos, masks etc. A 3D face-based recognition system is also more resilient to pose and illumination variations as well as to facial occlusions of any kind (e.g. glasses, scarves, caps, etc.).

What are the challenges to overcome?

At the moment we have everything we need for handling 3D face data. We have cheap storage space for saving the data, reliable algorithms that can utilize the data for 3D face recognition, and the computational power to perform all related operations in real-time. So, that is everything, right? Well, not exactly. The 3D data cannot be captured by using a convenient setup of affordable off-the-shelf devices, like the typical cameras for the 2D data. And this is the main challenge to overcome here!

There are several options for capturing 3D facial data. One option would be to use a number of typical 2D cameras (the more the merrier) in order to capture images of a face from different angles. The resulting captures could then be used for reconstructing the 3D data. Obviously, that is not feasible for border control points or for on-the-move captures. Another option would be to use a 3D scanner. Such a sensor consists of two units that occupy a lot of space, needs manual handling, and is still very expensive. That makes it inappropriate for border control points as well. In order to overcome this issue, in the context of D4FLY we use a pioneering sensor manufactured by Raytrix, a partner in the D4FLY consortium. This sensor is based on a microlens array which records the face of an individual multiple times. Each instance of the face is treated as a separate capture from a slightly different angle, just as in the case of using multiple typical cameras. The captures are then used for reconstructing the 3D face. But still, the cost of this sensor is high, and on-the-move captures can be a bit tricky. To sum up, the capturing devices need to be further improved in terms of cost and quality.
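The general idea of recovering depth from captures taken at slightly different angles can be illustrated with the textbook two-view (stereo) relation, in which depth equals focal length times baseline divided by disparity. The sketch below is only that textbook relation under an idealized pinhole model with two parallel views. It is not the reconstruction method used by the Raytrix sensor or in D4FLY, and the function name and all numeric values are made up for illustration.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen in two parallel views (idealized pinhole stereo model).

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two viewpoints, in meters
    disparity_px:    horizontal shift of the same point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only: a point that shifts by 20 pixels between two views
# that are 1 cm apart, seen with a focal length of 1000 pixels.
nose_tip_depth = depth_from_disparity(focal_length_px=1000.0, baseline_m=0.01, disparity_px=20.0)
print(f"estimated depth: {nose_tip_depth:.2f} m")
```

The relation also hints at why the capture setup matters: the closer together the viewpoints are, the smaller the pixel shift that has to be measured for a given depth, so the more precise the measurement needs to be.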

Are we getting there?

Although predictions can be risky and difficult, it is expected that once the issues with capturing the 3D face are fully resolved, 3D face sensors will gradually replace the typical cameras in use today. This will require at least a decade of research work on the sensors. The fact that the new ISO/IEC DIS 39794-5 standard for biometric passports now contains 3D face data as well just confirms our expectations.

Mail: antonios.danelakis@ntnu.no